Today, web3.storage’s architecture stores data in multiple places that each provide different functionality, from fast reads across IPFS and HTTP to decentralized availability via Filecoin. Each of these places are associated with a different set of infrastructure. The awesome thing about IPFS’s content addressing is that, as a user, you can reference and access your data using the same content identifier (CID) regardless of the various places where your data is stored.

But what does “running IPFS infrastructure” actually mean? Why did we make the infra decisions we did, and how do they compare to the alternatives that other hosted IPFS storage providers might decide on across dimensions that matter to you, like cost, reliability, performance, and scalability? In this post, we discuss the different approaches that one might take when running IPFS infrastructure, and why we chose ours.

Architecture options

There are various implementations of IPFS one can run for a hosted service, and structures of how they are run. When reading through these options, it’s important to keep in mind that these options are not mutually exclusive (since content addressing allows you to access data from the network using the same identifier). Even for web3.storage, the reality is we use a mixture of the below options depending on the use case. These options are also not exhaustive for the future, but represent much of what we see today.

One note before really diving in: for the vast majority of developers and web users out there looking to utilize IPFS, we think the right choice is to use a hosted service like web3.storage. This allows users to avoid having to worry about the burden of running and scaling their own infrastructure. In this post, as we dive into the different IPFS infrastructure options, we evaluate things in the context of running such a hosted service.

Hosted Kubo nodes

Kubo (fka go-ipfs) is the reference implementation of IPFS. It’s the most common one - for instance, when you install IPFS Desktop, you’re installing a Kubo node that runs on your computer. It runs in Go, so it’s a natural candidate for building a hosted service around. The first versions of web3.storage, and its sister product, NFT.Storage, were built on Kubo, so we’ve experienced first-hand the pros and cons of building a managed service around it.

Kubo has some great advantages, generally at smaller levels of scale.

-

It comes built-in speaking both the native IPFS protocol, bitswap, and HTTP.

- Bitswap allows users to fetch content in a trustless and verifiable way by fetching the data block by block to build up the graph used to generate the CID (giving you all the pieces to check that the content you’re receiving matches the hash).

- HTTP, as the primary protocol used by internet users, allows billions of people and even more devices to use IPFS.

-

It’s super easy to set up and deploy, and since it’s the reference implementation, it gets consistent feature updates.

-

It is highly performant when the volume of data it is handling is small, especially relative to the specs of the infrastructure it runs on (e.g., disk space, CPU utilization). This also translates to its cost - it’s cost effective at smaller levels of scale, especially because you have the option to run it in your own infrastructure.

However, Kubo also comes with a number of downsides which especially become obvious at higher levels of scale.

-

It has high levels of overhead when processing uploads and making it available. This is particularly noticeable at higher levels of scale and utilization, when even simple uploads and reads can become unreliable.

- Kubo processes uploads to index the CIDs of the blocks to their locations.

- However, it also validates that the root CID of the graph corresponds to the entire upload - additional overhead that can be felt at scale.

- In addition, these processes are run on the same machine as all the other things going on. In a managed service context, this involves other users uploading data, any reads of the data, and more. Especially from a disk IO perspective, things can get choked up.

-

In the same vein, content discovery on the decentralized network can become spotty at scale.

- To let other IPFS nodes out there that they have a certain set of content, Kubo generally provides records to a distributed hash table (DHT) that tracks which nodes in the network have what content.

- Broadcasting of content to the DHT is less reliable as Kubo gets busier under load - .

- These issues can be mitigated if the node requesting content is peered with the node hosting content. However, as a hosted service provider, this can be difficult to rely on, since it requires your users’ nodes to always be up-to-date (and for Kubo to scale, new nodes have to be spun up - we discuss this more below).

-

Costs for running Kubo nodes can blow up as their usage grows because of the tax this overhead incurs on the hardware.

Scaling options

Given Kubo’s weaknesses as its usage grows, it’s useful to look at the options to scale it.

-

Dedicated Kubo nodes: Run a separate Kubo node for each large user

- Examples: Pinata, Infura

- Pros: Provisions nodes independently so traffic or utilization from one set of users is less likely to affect other users

- Cons: Incurs overhead to provision new nodes, underutilizes hardware since you have to provision extra space on drives, makes relying on peering more difficult (since each new node has a new peer ID) which can be especially problematic for 3rd party IPFS nodes like public gateways

-

Shared IPFS nodes: Orchestration / load balancing on top of a set of shared nodes (e.g., IPFS Cluster)

- Examples: Estuary (fork of Kubo), web3.storage and NFT.Storage before moving to Elastic IPFS

- Pros: Utilizes hardware closer to the limits before getting choked up (we saw this at about 50% disk utilization), generate new nodes less often making it easier to rely on peering (since new peer IDs are generated less frequently)

- Cons: Heavy traffic from one set of users can still affect other users, and in the worst case scenario, still lead to downtime

Elastic IPFS

We wrote and launched Elastic IPFS, a cloud-native IPFS implementation, in mid-2022. You can read more about this on the NFT.Storage blog.

A “cloud-native” design of IPFS led to the different components of an IPFS node being deployed on independent microservices.

-

Uploaded data is stored as CAR files at rest, which are just serialized IPFS DAGs, in cloud object storage.

- CAR files are generated client-side by users, meaning these users generate the CID locally, maintaining a trustless relationship between the user and Elastic IPFS.

-

Serverless workers jump into action when a new CAR file appears, indexing all the blocks to the CIDs in the CAR file.

-

This index is stored in a NoSQL key-value database like DynamoDB.

-

Records of which CIDs Elastic IPFS has are sent to

storetheindex, which other IPFS nodes like Kubo nodes can use for content discovery (in parallel with the DHT). -

A virtual server that appears as an IPFS peer to the rest of the network is set up to handle content requests over bitswap.

- When requests for content come in, serverless workers use the index to find where the blocks that correspond to the requested CIDs sit, and the virtual server sends the content to the requestor.

We found the advantages of using a cloud-native design to build a managed service around very compelling.

-

Processes are independent from a number of dimensions - hardware utilization, scalability, and load balancing, meaning traffic and utilization from one set of users generally doesn’t affect others.

- This also means that, as we grow as a service, we avoid the compounding overhead that Kubo nodes face.

-

These processes are often more efficient as well; for instance, indexing CIDs to blocks just happens without needing to validate that a complete graph of data has been uploaded.

-

Since we can run a “single” IPFS node from the perspective of the network, we have a single, stable peer ID that any 3rd party IPFS node can peer with for fast reads over bitswap.

Some downsides remain that are worth recognizing, though these matter far less at scale.

-

Because it is built on independent microservices, Elastic IPFS can have slightly worse read / write performance vs. Kubo nodes at small amounts of data stored. However, this remains stable as it grows.

- Since Elastic IPFS relatively new, there low-hanging performance improvements we are looking at to improve this.

-

It is natural for Elastic IPFS to be instrumented on public cloud infrastructure to avoid the overhead of managing and scaling your own hardware (for instance, web3.storage currently uses AWS and Cloudflare). However, this can have shortcomings.

-

In particular, data egress is expensive. However, because IPFS uses content addressing, we also are able to lean into the cloud providers that make the most sense by use case (e.g., utilize DynamoDB from AWS for indexing, but cheap egress from Cloudflare).

-

More generally, by building on top of public cloud services themselves, it’s makes it more difficult to disrupt them in some ways (e.g., can’t offer a lower cost). However, our take is that performance and reliability are the most important things to convincing developers with actual use cases to use these verifiable, hash-addressed protocols. And by leaning into the unique things that IPFS unlocks (e.g., no lock-in), they reap benefits both now and as the underlying tech improves.

- By getting users onto web3 protocols like IPFS now, they can seamlessly take advantage of the benefits of a more peer-to-peer and trustless world as they become increasingly competitive from a cost and performance perspective.

- For instance, we also store copies of all data on web3.storage data into Filecoin storage deals as well. This allows for verifiability of the physical storage of users’ data today, but should be viewed as more of a disaster-recovery backup of the data.

- In the future, as retrievability of data from Filecoin improves, users can rely more on the data as very cheap (in many cases, free), cold storage (with slightly higher latency than if the data is stored in Cloudflare).

-

You can read more about web3.storage’s current and future architecture plans in particular here.

-

How we got here

As mentioned, web3.storage and NFT.Storage used to be primarily based on Kubo nodes with an IPFS Cluster orchestrating things on top. This worked really well as our service first came to be. In particular, NFT.Storage first came together in just three weeks, so we needed something fast to get up and running!

After the services got popular, though, we started seeing major issues as we scaled, realizing that Kubo wasn’t necessarily designed for the use case of running a managed service provider. In the worst times, we were seeing a major outage every 1-2 weeks, and fixing these issues were more trial-and-error than best-practice debugging. As a result, we quickly got to work writing Elastic IPFS, incorporating the lessons we had learned.

Deploying Elastic IPFS had a huge impact on our service levels and performance! And we’ve continued to see things scale to over 5 billion blocks being available to the IPFS network.

We’ve also seen that it has been cheaper for us to run. We continued to run our old IPFS Cluster for a while. Even though didn’t make any new writes to it for many months, it cost significantly more to keep up than running Elastic IPFS.

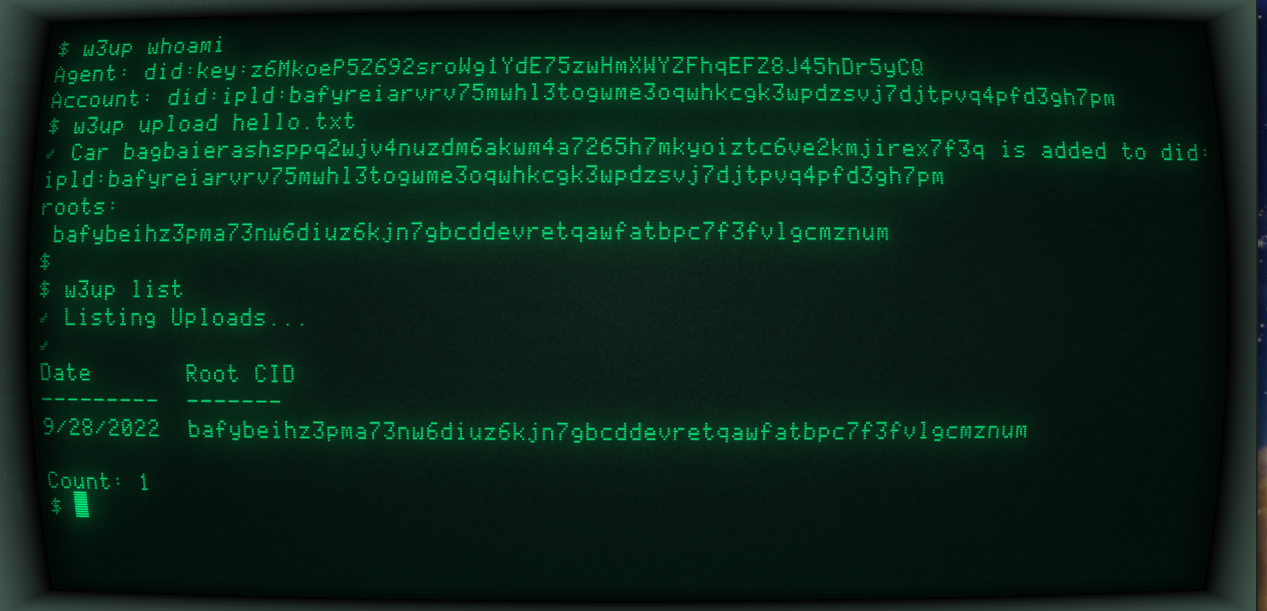

Finally, using a cloud-native structure sets us up for huge improvements coming soon. In particular, w3up, our new upload API, is only enabled because of Elastic IPFS’s architecture. We discuss the benefits of w3up at length in other blog posts, but can’t wait for the performance and creativity it will unlock.

We still use Kubo nodes in certain cases - for instance, with our Pinning Service API implementation since Kubo does well fetching content from the IPFS network. This illustrates how we can utilize IPFS to choose the best infrastructure for the use case.

Future: Iroh

The n0 team is hard at work with a new IPFS implementation called Iroh. It strips down a lot of what Kubo does to improve its performance and scalability.

We’re excited about the potential of Iroh. It is being designed to be able to be used in a variety of use cases, from running a local IPFS node (even on mobile!) to building a managed service around.

Whether web3.storage finds that Iroh functions better than Elastic IPFS for our needs, or we continue running Elastic IPFS, we can’t wait for a more peer-to-peer world where IPFS nodes are run on clients and backends everywhere, and think Iroh will be a big part of this.

How to utilize this information

It’s the best time in history to use decentralized data protocols like IPFS. There are an abundance of services and tutorials out there to help you get started with the beauty of content addressing, which will help create a better web for its users.

However, at the same time, we’re still in the first inning of how IPFS can be used! This is relevant when you decide what service provider to use. Some things we suggest taking a look at:

- How are they architected today? Will things hold up at scale for your use case, and as they grow as a service?

- What price do they offer today? Does it seem sustainable given their architecture? Do they have plans to become more / less price competitive over time?

As seasoned veterans at developing IPFS protocols and running IPFS infrastructure, our team has seen all sorts of things - both good and bad - as we’ve run web3.storage. By sharing our knowledge and experiences, we hope to help demystify IPFS to developers to make it easier for them to use it (while also getting them excited about its potential!).